Maquiziliducks

The Language of Evolution

Sean D. Pitman M.D.

© June 2003

Last Updated: October 2007

An Explosion in a Junkyard

A common argument put forward against the theory of evolution is that evolution is statistically impossible, like an explosion in a junkyard creating a Boeing 747. Evolutionists ridicule this argument and hold it up as proof of the ignorance of those who use such “strawman” arguments. Of course, strawman arguments are those that attempt to attack a particular position or idea by presenting a false idea as the real thing. In other words, the opposing position is misrepresented by a weaker and easily defeated strawman argument. So, what is the “real man” of evolution?

It is undeniable that even the simplest living system far outshines the complexity of a Boeing 747. Even the "simplest" living cell easily exceeds that benchmark. So, here we have our biological Boeing 747. How did it get here from the surrounding pile of non-functional biological “junk”? The odds of such complexity suddenly assembling itself from the biochemical junkyard are truly more remote than a Boeing 747 being created by an explosion in the city dump. Of course, evolutionists see no problem here since they understand just how easily this problem is solved. Obviously, such complexity is not created suddenly, but gradually. The energy of the explosion is not random and suddenly spent, but is directed over long periods of time through a selection process called natural selection. In this way, increasing complexity is formed gradually, in a stepwise fashion.

So, to represent the theory of evolution as requiring the sudden formation of complexity is simply the building of a strawman that does not really exist. The true man of evolution is built upon the concept of gradual change. These changes are made possible because each tiny step is recognized as something functionally new and beneficial by nature. If the change is seen as reproductively advantageous by nature, this new step will be maintained. Conversely, all detrimental steps will be eliminated by nature. In this way natural selection guides the random changes in a non-random way. So, when challenged to explain how randomness can create complexity, evolutionists just smile a knowing smile and proceed to explain just how simple it all is. Evolutionists see this truth so clearly that it is really quite amusing for them to hear the confusion of creationists and design theorists who try to question the validity of such a simple and obvious fact of nature with arguments that reveal only ignorance as to the very process of evolution.

BioVisions at Harvard University

Cellular Visions: The Inner Life of a Cell

Functionally Different

However, there is just one little problem. Did you notice it? The catch for the theory of evolution is the word “functional.” In order for changes to be selected for or against by nature, in a positive non-random way, they must be functionally different from what came before.

Many genetic changes are in fact "detrimental" or "neutral" changes with regard to reproductive advantage. There are also portions of genetic elements that have no known function at all. Changes to these genetic sequences are also functionally neutral because they have the same non-function as they had before. In order for nature to be able to "select" between two different sequences, they must be functionally different from each other, not just sequentially different. The reason is that nature does not analyze sequences; nature only analyzes function. Once there are functional differences between two sequences, nature selects the one with the more beneficial function, with regard to reproductive advantage, over the other. So, not only do changes have to be functionally different in some way to enter the selection process, but, to avoid being discarded, the changes must be beneficial as well.

So, can something that is at least as complex as a Boeing 747 be made in a stepwise fashion with each step being functionally beneficial? Before we answer this question, we must first check with the evolutionists to see if they agree that evolution must work this way. Do evolutionists agree that each step of evolution must be functionally beneficial?

Irreducible Complexity

In order to answer this question, one need only look at how ardently evolutionists oppose the idea of “irreducible complexity.” This idea was proposed in 1996 by the biochemist Michael Behe in his book, Darwin’s Black Box.1 His book ignited a fair bit of controversy and discussion, even in the scientific community. The concept of irreducible complexity is basically the idea that some types of function or systems of function will not work at all, in their original capacity, if any one part is removed. In other words, a minimum number of parts is required for a particular function to work. The obvious assumption is that the existence of such a system cannot be explained by any gradualistic mechanism of assembly such as the one proposed by the theory of evolution. Behe proposed several examples of irreducibly complex systems, including a bacterial flagellum and a common mousetrap.

Evolutionists generally responded by denying that any irreducibly complex system exists in the natural world. They claimed that all functional systems can be broken down into smaller and yet smaller functional systems. By their adamant opposition to the idea of irreducibly complex systems, evolutionists seem to be admitting that each evolutionary step must be functionally beneficial. In fact, they attempt to show how mousetraps and bacterial flagella can be formed gradually, with each mutation being functionally beneficial. Obviously, therefore, the maintenance of function and the generation of improved function at each step along the evolutionary pathway are of key importance to the theory of evolution.

What many fail to realize is that all systems of function are irreducibly complex. Take Behe's mousetrap illustration, for example. There are those who claim that all of the parts can be removed in various ways and the resulting mousetraps will still catch mice. Certainly there are a great many different ways to catch mice, but each way is irreducibly complex. Removing or changing this part or that part may still catch mice, but not in the same way. Each different way is uniquely functional as well as irreducibly complex. Even if the changes still catch mice, the unique function of the original mousetrap has still been lost. Of course, if a series of mousetraps could be built with each and every small change resulting in a better mousetrap, a very complex mousetrap could be evolved, one step at a time. However, the resulting complexity of the most complex mousetrap is still irreducible. There is still a minimum number of parts required for it to catch mice in that particular way.

Really then, an irreducibly complex system is not impossible to evolve. It happens all the time in real life. For example, single protein enzymes, like lactase and nylonase, have been shown to have evolved in real time in various bacteria. These enzymes are actually very complex. They are formed from even smaller subunits called amino acids. Long strings of these amino acids must be assembled in the proper sequence or the particular enzymatic function in question will not be realized. Certainly, to produce a function like the lactase function, there must be a minimum number of parts in the right order. The lactase function is therefore "irreducibly complex." Of course, there are many different lactase enzymes, both recognized and potential, out there in the vastness of sequence/structure space. Yet, all of them are irreducibly complex. They all require a minimum number of parts (amino acids) all working together at the same time to produce a specific type of potentially beneficial function - like the lactase function.

The question is, do these "irreducible" sequence and structural requirements prevent such systems from evolving? Surprisingly for some, the answer is no. On occasion, such single-protein enzymes have been shown to evolve de novo in certain bacteria. Really then, the fact that a function is irreducibly complex does not mean that it is beyond the reach of mindless evolutionary processes.

So, where is the problem? If irreducible systems of function can be evolved, and they clearly can be evolved, the case is closed, is it not? Well . . . not quite.

More Bad than Good

It is interesting to note that the vast majority of the inheritable changes in biological systems are neutral or even harmful. As previously discussed, neutral changes cannot be selected for or against by nature and harmful changes (defined by being reproductively disadvantageous) are selected against. We must consider then only those very few mutations that actually result in some detectable, beneficial, phenotypic change.

To understand a little of the rarity of such "beneficial" mutations, consider that the overall mutation rate for an average living cell is on the order of one mutation per gene per 100,000 generations.9,10,11 Estimates for the average human genomic mutation rate run between 100 and 300 mutations per generation.13,14 In other words, a human child will have from 100 to 300 mutational differences from his or her parents. Of these mutations, only around 2% will be "functional" mutations. Of all functional mutations, most estimates use a ratio for beneficial vs. detrimental mutations of 1:1000.15,16 That means that it would take around 500 generations (about 10,000 years) to get just one beneficial mutation in a single family line.
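
The chain of estimates in the paragraph above can be checked with a few lines of arithmetic. This is a minimal Python sketch that uses only the figures quoted there (100 to 300 mutations per generation, about 2% functional, roughly 1 beneficial per 1,000 detrimental functional mutations); the 20-year generation time is an assumption implied by the text's conversion of 500 generations into about 10,000 years, not a figure stated on its own.

```python
def generations_per_beneficial(mutations_per_generation: float,
                               functional_fraction: float = 0.02,
                               detrimental_per_beneficial: int = 1000) -> float:
    """Average generations until one beneficial mutation appears in one family line.

    Follows the paragraph's figures: a fraction of new mutations are functional,
    and roughly 1 in `detrimental_per_beneficial` functional mutations is beneficial.
    """
    functional_per_generation = mutations_per_generation * functional_fraction
    return detrimental_per_beneficial / functional_per_generation

YEARS_PER_GENERATION = 20  # assumption implied by "500 generations (about 10,000 years)"

for rate in (100, 300):
    gens = generations_per_beneficial(rate)
    print(f"{rate} mutations/generation -> {gens:.0f} generations "
          f"(~{gens * YEARS_PER_GENERATION:,.0f} years)")
```

The 500-generation figure corresponds to the lower estimate of 100 mutations per generation; at the upper estimate of 300 the same arithmetic gives roughly 167 generations.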

So, from the perspective of a single human gene, it would take around 50 million generations (a billion years) to realize a single beneficial mutation in a single family line. During this time each gene in the family genome would have also been hit by around 1,000 detrimental mutations. No one seems to have a satisfactory explanation as to how all these detrimental mutations are eliminated fast enough in order for the very rare beneficial mutations to keep up. Unless beneficial mutations can keep up with the much higher detrimental mutation rate, extinction will occur in relatively short order. In fact, estimates show that in order to keep up with such a low ratio of beneficial vs. detrimental mutations, the average human woman would have to give birth to more than 20 children in each generation. Some authors suggest that a more realistic number is a reproductive rate of 148 children per woman per generation (link to discussion).

Why living things with relatively long generation times, like humans and apes, have not yet gone extinct over the course of millions of years remains a mystery. Consider an excerpt from a fairly recent Scientific American article entitled "The Degeneration of Man":

According to standard population genetics theory, the figure of three harmful mutations per person per generation implies that three people would have to die prematurely in each generation (or fail to reproduce) for each person who reproduced in order to eliminate the now absent deleterious mutations [75% death rate]. Humans do not reproduce fast enough to support such a huge death toll. As James F. Crow of the University of Wisconsin asked rhetorically, in a commentary in ‘Nature’ on Eyre-Walker and Keightley's analysis: “Why aren't we extinct?"

Crow's answer is that sex, which shuffles genes around, allows detrimental mutations to be eliminated in bunches. The new findings thus support the idea that sex evolved because individuals who (thanks to sex) inherited several bad mutations rid the gene pool of all of them at once, by failing to survive or reproduce.

Yet natural selection has weakened in human populations with the advent of modern medicine, Crow notes. So he theorizes that harmful mutations may now be starting to accumulate at an even higher rate, with possibly worrisome consequences for health. Keightley is skeptical: he thinks that many mildly deleterious mutations have already become widespread in human populations through random events in evolution and that various adaptations, notably intelligence, have more than compensated. “I doubt that we'll have to pay a penalty as Crow seems to think,” he remarks. “We've managed perfectly well up until now." 17

Even though I do not agree with much of Crow's thinking, I do agree with him when he says that harmful mutations are accumulating in the human gene pool far faster than they are leaving it. However, on what basis does he suggest that harmful mutations spontaneously cluster themselves into “bunches” for batch elimination from the gene pool of a given population? Crow does not suggest a mechanism, nor is it remotely intuitive how this might even occur via naturalistic means.

Also, Keightley is far too optimistic in my view. He assumes that because evolution has happened in the past, evolution will somehow solve the problem. He fails to even consider the notion that perhaps humans, apes, and other species with comparably long breeding times have been gradually degenerating all along. Perhaps evolution only proceeds downhill (devolution)? Perhaps mutations do not improve functions over time so much as they remove functions over time in species with slow generation times?

Then again, even if the degenerative effects of mutations were somehow solved in populations with slow reproduction times, the addition of new information to the gene pool that goes beyond very low level functional systems involves the crossing of huge oceans of neutral or even detrimental sequence gaps that would take, for all practical purposes, forever to cross. It seems as though mutations, averaged over an extended period of time, tend toward loss and extinction rather than toward any sort of improvement or gain.

Neutral Mutations

Of course, there are some who argue that functional mutations are not the only mutations that contribute toward phenotypic evolution. Many claim that even neutral mutations make a contribution. Motoo Kimura's work, "The Neutral Theory of Molecular Evolution," published in 1983, is often quoted in support of this assertion. However, Kimura's basic idea was that many genetic changes are in fact neutral and that these changes are beyond the detection of natural selection. He writes, "Of course, Darwinian change is necessary to explain change at the phenotypic level - fish becoming man - but in terms of molecules, the vast majority of them are not like that." 4 Kimura basically considered random genetic drift in populations and did not primarily concern himself with phenotypic or functional genetic changes. Kimura's work was the first to seriously challenge the notion that all or even most mutational changes can be guided by natural selection.

Certainly then, it is an error to say that Kimura’s work with neutral evolution explains any sort of phenotypic evolution. Of course, Darwinian evolution is all about phenotypic or functional evolution. Kimura himself said that neutral evolution could not explain Darwinian evolution. Therefore, the neutral theory of evolution does not get Darwinian evolutionists out of the task of explaining how functionally different phenotypes evolve gradually.

Many continue to argue though that neutral mutations are not significant since they really do not interpose themselves between the various functions of living things to any significant degree. It is suggested that a series of mousetraps can be built from the most simple to the most complex with each tiny change being functionally beneficial the entire way. There really are no significant neutral or detrimental gaps to hinder this process. Therefore, no significant random drift is or was required to cross the distance between each beneficial change.

Antibiotic Resistance

Is it true? Is the argument that no significant neutral or detrimental gaps exist that must be crossed without the help of natural selection actually true?

Well, this argument is certainly true when it comes to the de novo evolution of antibiotic resistance in many different types of bacteria. It is quite interesting to note how bacteria evolve antibiotic resistance. Many of them do it in pretty much the same way. The method is really quite simple. It is based on the fact that antibiotics have very specific interactions with particular targets within bacteria. Many different point mutations to a particular target sequence will interfere with its interaction with the antibiotic. This interference results in bacterial resistance to that antibiotic. Such resistance develops very rapidly because a very large fraction of the possible mutations to the target sequence have the potential to significantly interfere with the antibiotic's interaction with the target. Because it is so likely that at least one of these many potentially interfering mutations will occur in at least one member of a large population of several billion bacteria, the average time needed to evolve antibiotic resistance, regardless of the type of bacteria or antibiotic used, is usually measurable in days to weeks (under sublethal levels of antibiotic exposure).
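
The "at least one mutant somewhere in the population" reasoning above is the complement rule: if each cell independently has a small probability p of carrying a resistance-conferring mutation in a given generation, the chance that none of N cells does is (1 - p)^N. The sketch below is a minimal illustration only. The per-cell probability is a hypothetical input built from the document's own figure of one mutation per gene per 100,000 generations, multiplied by an assumed (hypothetical) fraction of target-gene mutations that block the antibiotic.

```python
import math

def prob_at_least_one_mutant(p_per_cell: float, population: int) -> float:
    """P(at least one of `population` cells carries the mutation) = 1 - (1 - p)^N.

    log1p/expm1 keep the result accurate when p is very small.
    """
    return -math.expm1(population * math.log1p(-p_per_cell))

# Hypothetical inputs, for illustration only:
per_gene_rate = 1e-5          # one mutation per gene per 100,000 generations (from the text)
interfering_fraction = 0.01   # ASSUMED share of target-gene mutations that block the antibiotic
p = per_gene_rate * interfering_fraction
population = 1_000_000_000    # "several billion bacteria", rounded down

print(f"expected resistant mutants per generation: {p * population:.0f}")
print(f"P(at least one): {prob_at_least_one_mutant(p, population):.6f}")
```

With these assumed inputs a population of a billion cells expects about 100 resistant mutants per generation, so the probability of at least one is essentially 1; changing the assumed fraction shifts the number but not the shape of the argument.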

Other bacteria have pre-formed genetic codes for certain enzymes that attack a particular antibiotic directly (e.g., penicillinase). Such enzymes are fairly complex and have not been shown to evolve directly in real observable time. However, those bacteria that have access to the pre-formed codes/genes for these enzymes usually regulate the expression of these genes so that only limited quantities of the enzyme are produced. When exposed to large quantities of the antibiotic, there simply isn't enough enzyme to deal with it, and the bacterium dies. However, many different point mutations are capable of disabling the control of enzyme production so that massive quantities of the enzyme are produced. So, when very high levels of the antibiotic come around again, these bacteria are able to survive and pass on their poorly controlled enzyme gene to their offspring.

Humpty Dumpty Sat on a Wall . . .

Of course, in both of the above described methods of antibiotic resistance, the lucky bacteria gained the antibiotic resistance function through mutations that interfered with various interactions or previously functional systems. They did not make a new function from scratch, but only interfered with an existing function (i.e., the antibiotic-target interaction). This sort of disruption of a previous function or interaction is analogous to the famous children's story of Humpty Dumpty who fell off the wall. Remember that it was much easier to break Humpty Dumpty than it was to put him back together again. Every child knows this law of nature. It is far easier to destroy than it is to create.

Why is this? Why is it easier to destroy than it is to create from scratch? The reason is that there are a lot more ways to destroy than there are to create. There are a zillion more ways to break a glass vase than there are to fix or re-create that glass vase from the resulting shards of glass. The same thing holds true for the various functions in living things. They are like glass vases.

The evolution of antibiotic resistance was easy because it involved the breaking of an established function. However, many more functions exist in living things that cannot be created by breaking some pre-established function. The relatively simple functions of single protein enzymes are a good example of this. There is certainly no way to get a function like the penicillinase enzyme to evolve by disrupting some other interaction. The same is true for the lactase and nylonase enzymes. The functions of these enzymes cannot be realized by interfering with other pre-established functions. The experiments of Barry Hall point this problem out quite clearly.

Single Protein Enzymes - Not So Simple

Barry Hall did a very interesting experiment with E. coli bacteria. E. coli bacteria naturally produce a lactase enzyme that hydrolyses the sugar lactose into the sugars glucose and galactose, which the bacteria can break down further to produce usable energy. What Hall did was to delete the gene for this enzyme (lacZ) so that these particular E. coli bacteria could no longer make the lactase enzyme. He then grew these bacteria on a lactose-enriched medium. In a very short time (one generation), these bacteria evolved the ability to use lactose again. Upon further investigation, Hall found that a single point mutation to another very different gene resulted in the production of a lactase enzyme. Hall named this new gene the "evolved beta-galactosidase" gene (ebgA). What Hall did next is very interesting. He deleted both the lacZ and ebgA genes in the E. coli and then grew them on the same lactose-rich medium. They never again evolved the ability to make a lactase enzyme despite thousands of generations of time, a high mutation rate, huge population numbers, and a selective lactose medium that would have benefited them if they ever did evolve the lactase function again. Hall was mystified. He determined that these double mutant bacteria had "limited evolutionary potential."4 There are also many other types of bacteria that have never evolved the lactase function despite observation and records of them for over a million generations. Besides certain of Hall's E. coli colonies, these include certain members of Salmonella, Proteus, and Pseudomonas as well as many more.

So, what was the problem? These very same bacteria that could not evolve the lactase function over the course of tens of thousands of generations would have quickly evolved resistance to any single antibiotic in just a few generations. Why then did they have such a problem with a relatively simple enzyme function based on a single protein? The reason is that enzymatic functions are far more complex than the function of antibiotic resistance. In short, there are different levels of functional complexity. As it turns out, lower-level functions are much easier, exponentially easier, to evolve Darwinian-style than systems of function that have higher minimum structural threshold requirements.

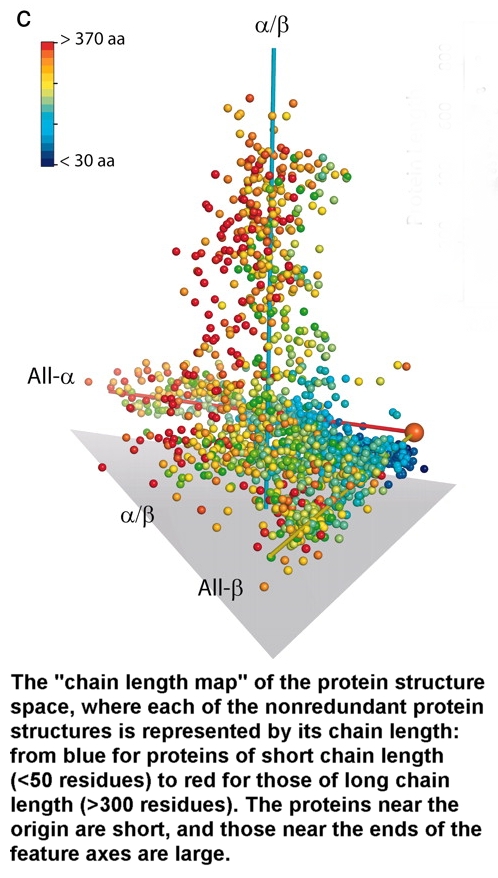

Consider that a single subunit of the lacZ enzyme is around 1,000 amino acids in size. With 20 possible amino acids at each position, the total number of different amino acid sequences of 1,000aa is 20^1000, or around 10^1300. That is a huge number. The total number of particles in the entire universe is estimated to be around 10^80. Out of all these 10^1300 potential amino acid sequences, how many of them would have the lactase function? If there were a trillion trillion trillion trillion (10^48) lactase enzymes out there of 1,000aa or less, there would be over 10^1250 non-lactase sequences for every one lactase sequence. Finding any one of these functional lactase sequences at random would be like finding one particular proton in a universe of universes, each the size of our own. Obviously though, the E. coli in Hall's experiment just so happened to evolve a very different useful lactase sequence in just one generation. Perhaps then the lactase function is a lot more "simple" or common in the potential of sequence/structure space? Perhaps the correct ratio would be closer to 1 in 10^24 (a trillion trillion) instead of 1 in 10^1250?
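
The sequence-space figures in the paragraph above follow from working in base-10 logarithms, since 20^1000 is far too large to compare by hand. This minimal sketch assumes the standard 20-amino-acid alphabet and counts only sequences of exactly 1,000 residues (shorter sequences would add a negligible amount); the 10^48 lactase count is the paragraph's own hypothetical, not a measured value.

```python
import math

AMINO_ACIDS = 20  # standard amino acid alphabet

def log10_sequence_space(length: int, alphabet: int = AMINO_ACIDS) -> float:
    """log10 of the number of distinct sequences of the given length."""
    return length * math.log10(alphabet)

space = log10_sequence_space(1000)      # about 1301, i.e. roughly 10^1300
log10_lactase = 48                      # a trillion^4 = 10^48 (hypothetical from the text)
ratio = space - log10_lactase           # log10 of non-lactase per lactase sequence

print(f"20^1000 ~ 10^{space:.0f}")
print(f"non-lactase per lactase ~ 10^{ratio:.0f}")
```

Working in logarithms turns the division of two astronomically large counts into a simple subtraction of exponents (about 1301 minus 48, or roughly 1253).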

This ratio is a lot more reasonable considering that the smallest useful lactase sequence known is around 380aa in size. One must also consider that the sequence requirements for a protein of this size are somewhat flexible. For example, if one were to change 4 or 5 letters in this paragraph, its essential meaning or function would most likely be maintained. However, there is a threshold beyond which too many changes would completely remove or distort its original meaning/function. The same thing is true of functional protein-based systems. All such systems have a certain degree of flexibility. This flexibility translates into an increased number of potentially beneficial sequences and/or structures in sequence/structure space that could produce a particular type of functional system - like one with the lactase ability.
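
One way to put a number on "flexibility" is to count how many sequences lie within k substitutions of a single reference sequence: choose which positions change, then pick one of the 19 other amino acids at each changed position. The sketch below is illustrative only. The tolerance values k are hypothetical, and the count says nothing about which of those neighbors actually remain functional; it only shows how the neighborhood grows with k compared with the whole space of 380-residue sequences.

```python
import math

def sequences_within(length: int, max_substitutions: int, alphabet: int = 20) -> int:
    """Number of sequences differing from a reference at no more than
    `max_substitutions` positions (Hamming neighborhood size)."""
    return sum(math.comb(length, i) * (alphabet - 1) ** i
               for i in range(max_substitutions + 1))

LENGTH = 380                              # smallest known lactase size quoted in the text
total_log10 = LENGTH * math.log10(20)     # log10 of all possible 380-residue sequences

for k in (0, 5, 50):                      # hypothetical tolerances, for illustration only
    n = sequences_within(LENGTH, k)
    print(f"k={k:>2}: ~10^{math.log10(n):.1f} sequences "
          f"(fraction of all 380-mers ~10^{math.log10(n) - total_log10:.1f})")
```

Python integers are arbitrary-precision, so the neighborhood sizes are computed exactly before `math.log10` converts them for display.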

In any case, even with a fair degree of sequence flexibility and reduced minimum size requirements, it is obvious that even the simplest single protein enzymes are far more complex than most forms of antibiotic resistance. They are certainly a step up in "functional complexity" or "minimum structural threshold requirements". With this increased complexity comes a lot more difficulty for the mindless processes of Darwinian-style evolution to overcome. Antibiotic resistance is generally quite easy for mindless evolutionary processes to produce because it involves breaking something. However, these same evolutionary processes have great difficulty evolving a relatively simple lactase enzyme in thousands upon thousands of generations in huge populations.

The problems only get worse from here on out. More complex bacterial functions, such as the motility function, require multiple proteins all working together at the same time. If just one relatively simple enzyme is hard to evolve, try calculating the time required for a multi-protein system to evolve. The neutral gaps quickly get enormous. For example, if one trillion high-level potentially beneficial systems (i.e., requiring a minimum of more than 1000 fairly specified amino acid residues) were at least 30 novel amino acid residue changes away from the currently existing genetic real estate of a large bacterial colony with a steady state population of one trillion members, how long would it take for that colony to evolve even one of these potentially beneficial systems that exist in the potential of sequence/structure space? The number of possible sequences spanning this gap is 20^30, or about 10^39. If each one of the one trillion bacteria mutated this particular sequence into a new sequence once every day (without repeating any sequence along the way), it would take just under 3 trillion years, on average, to cross a gap of just 30 non-selectable steps.
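
The "just under 3 trillion years" figure above can be reproduced directly. This minimal sketch uses the paragraph's own simplifications: every trial tests a new sequence, the trillion target systems are spread evenly through the gap, so the average number of trials needed is roughly the gap size divided by the number of targets. The 365.25-day year is the only added constant.

```python
AMINO_ACIDS = 20
GAP_RESIDUES = 30          # non-selectable residue changes (from the text)
TARGETS = 10**12           # potentially beneficial systems in the gap (from the text)
POPULATION = 10**12        # bacteria, each testing one new sequence per day (from the text)
DAYS_PER_YEAR = 365.25

gap_sequences = AMINO_ACIDS ** GAP_RESIDUES   # exactly 20^30, about 1.07e39
trials_needed = gap_sequences / TARGETS       # average trials to hit any one target
days = trials_needed / POPULATION
years = days / DAYS_PER_YEAR

print(f"gap ~ {gap_sequences:.2e} sequences -> ~{years:.2e} years")
```

The result is about 2.9 trillion years; using the rounded 10^39 instead of the exact 20^30 gives about 2.7 trillion, so either way the figure lands just under 3 trillion.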

Is there evidence that such gaps exist? Well, if one considers various systems with different minimum structural threshold requirements, one starts to notice a pattern. The greater the minimum threshold requirements, the more widely separated such systems are in the vastness of sequence space. In short, there is a linear relationship between the minimum threshold requirements and the number of required amino acid residue differences between a system and the next closest beneficial system with a novel function. And, a linear increase in the required residue differences (i.e., the "gap size") translates into an exponential increase in the amount of time needed for a population of a given size to cross the gap.
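
The step from a linear increase in gap size to an exponential increase in time follows because each additional non-selectable residue multiplies the number of sequences to search by about 20. This sketch reuses the simplified model of the 30-residue example in this section (one trillion targets, one trillion bacteria each testing one new sequence per day, no repeats). The specific gap sizes in the loop are arbitrary choices for illustration.

```python
import math

def log10_years_to_cross(gap_residues: int,
                         targets: float = 1e12,
                         population: float = 1e12,
                         alphabet: int = 20) -> float:
    """log10 of the average years to cross a gap of `gap_residues` non-selectable
    changes, under the same simplified model as the 30-residue example."""
    log10_trials = gap_residues * math.log10(alphabet) - math.log10(targets)
    log10_days = log10_trials - math.log10(population)
    return log10_days - math.log10(365.25)

for gap in (20, 25, 30, 35, 40):
    print(f"gap of {gap} residues: ~10^{log10_years_to_cross(gap):.1f} years")
```

Each additional residue adds a constant log10(20), about 1.3, to the exponent, which is exactly what "linear in gap size, exponential in time" means.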

Is the problem starting to become clear? If not, perhaps some illustration based on the way other language systems work may help clarify this issue further.

The Language of DNA

Living biological systems are built using information that is written in the coded order of four chemical "letters" labeled A, T, C, and G (Adenine, Thymine, Cytosine, and Guanine). Strings of these letters are called "DNA" (DeoxyriboNucleic Acid). The cellular structure (phenotype) is but a reflection of the information written like a book in the coded language of its DNA.

As with other languages, the genetic code is arbitrary. It codes for letters in another arbitrary language code, that of proteins. Proteins are also built using chemical letters that are often assigned meaning or function by the cell or system of cells that make and use them. There is even a third language of sugars called glycans that is extremely complex. All of these languages work very much like any human language-system works.2

Now, what is the value of an arbitrary symbol in a language? What inherent meaning do the letters of the alphabet carry? By themselves, do they have any inherent meaning or function? Obviously not. Their meaning only comes after the meaning or function is arbitrarily assigned to the symbols by a language system or code. Before this, the symbols or letters were just like so many random scraps all jumbled together in an alphabet junkyard, like "maquiziliducks."

What does the sequence of letters “maquiziliducks” mean? Maquiziliducks is not a word. The letters are all jumbled up and mean nothing. But why? Why does this particular series of letters have no meaning? There are some other words, such as "xylophone," that look just as strange, and yet xylophone means something in English. Obviously, maquiziliducks has no meaning because it is not part of the English language system. It gets no points in a game of Scrabble. Of course, maquiziliducks might mean something to an alien from the planet Quaziduck, but what good is that to an Englishman in an English-speaking environment?

So what’s the point? The point is that living biological systems are language systems just like the English language system or computer language systems. Like all other language systems, biological language systems use chemical letters and words that are defined by a limited cellular system and its interaction with its limited environment. Nothing functions by itself just as no letter or series of letters has inherent meaning.

Cats Turning Into Dogs

I have often heard that genetic evolution of new genes with new traits is easy. A common example used to demonstrate this sort of evolution is the series of words, "cat to hat to bat to bad to bid to big to dig to dog." This word evolution obviously shows a dramatic evolutionary sequence that changes meaning with each and every letter change. There are no "neutral" changes in this evolutionary sequence. It seems pretty convincing until we remember that meaningful words make up a much higher proportion of all possible three-letter combinations than of all possible six-letter combinations. Three-letter words are relatively "simple" in structure and function. However, just as we discovered above, the neutral gaps get exponentially wider as we move up the ladder of functional complexity. Moving from the evolution of antibiotic resistance to the evolution of simple enzymes is like going from the evolution of three-letter words to the evolution of ten-letter words.

A Million Monkeys . . .

Some try to explain this problem by saying that, "A million monkeys typing away will eventually create all the works of Shakespeare... if given enough time." However, some quick calculations will show that forming just a small phrase of Shakespeare such as, "Methinks it is like a weasel.", would take a million monkeys each randomly typing 100 five-letter words a minute, around one trillion trillion trillion years on average. Clearly then, random chance is powerless to explain the existence of complex language systems or the evolution of one system into another. There must be something that helps random chance along. But what is it?

Methinks it is Like a Weasel

Maybe it is not quite fair to compare the English language to the workings of DNA and proteins. Some evolutionists might balk at this, but many actually do accept the validity of such a correlation. This acceptance indicates that evolutionists do generally recognize the coded nature of genetic information systems. In fact, Richard Dawkins, perhaps the most famous living evolutionary biologist, also explains how evolution might work by appealing to examples of English-phrase evolution. His “Methinks it is like a weasel” phrase evolution is really quite famous.3 It has convinced many very intelligent people that the theory of evolution easily explains the formation of complex biological language systems. So, let's look a bit into exactly what Dawkins did.

What Dawkins did was to evolve his chosen target phrase with a computer algorithm that began with a random, computer-generated series of letters such as “MWR SWTNUZMLDCLEUBXTQHNZVJQF.” Dawkins began with a non-functional, meaningless phrase and then evolved “Methinks it is like a weasel” in very short order by having the computer select, in each generation, the copies that were "closest" in sequence to "Methinks it is like a weasel." When one runs similar programs, it seems almost miraculous to watch this evolution happen before one's very eyes, until one realizes that the computer is selecting on genotypic similarity to an ideal target sequence, not on phenotypic changes in function. None of the intermediate steps in this example of sequence evolution has any phenotypic (English-language) function whatsoever. The intermediate phrases are meaningless, much less beneficial. They represent links in an evolutionary chain of function, and yet none of the links make any sense in the English language.
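The procedure described above can be sketched in a few lines. This is not Dawkins' own program; the brood size of 100, the 5% per-letter copying error rate, the fixed random seed, and the choice to keep the parent in the running are all assumptions made for illustration. The thing to notice is the `score` function: it compares each candidate letter by letter against the fixed target and never asks whether an intermediate string means anything in English.

```python
import random
import string

TARGET = "METHINKS IT IS LIKE A WEASEL"
ALPHABET = string.ascii_uppercase + " "  # 27 symbols


def score(candidate: str) -> int:
    """Genotypic similarity: count positions that already match the target."""
    return sum(c == t for c, t in zip(candidate, TARGET))


def mutate(parent: str, rate: float, rng: random.Random) -> str:
    """Copy the parent, replacing each letter with a random one at the given rate."""
    return "".join(rng.choice(ALPHABET) if rng.random() < rate else c for c in parent)


def weasel(offspring: int = 100, rate: float = 0.05, seed: int = 1,
           max_generations: int = 10_000) -> list[str]:
    """Run cumulative selection toward TARGET; return the best string of each generation."""
    rng = random.Random(seed)
    parent = "".join(rng.choice(ALPHABET) for _ in TARGET)  # random starting string
    history = [parent]
    while parent != TARGET and len(history) <= max_generations:
        brood = [mutate(parent, rate, rng) for _ in range(offspring)]
        # Keeping the parent in the pool means the score can never go down.
        parent = max(brood + [parent], key=score)
        history.append(parent)
    return history


if __name__ == "__main__":
    history = weasel()
    print(f"reached the target after {len(history) - 1} generations")
    for phrase in history[::10]:
        print(phrase)
    print(history[-1])
```

Any string could be substituted for `TARGET` and the program would converge on it just as readily, because selection is measured against the target sequence itself rather than against any function the intermediate strings perform.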

Missing links in the fossil record are not the real problem for evolution. The really significant missing links are found in genetics. Where are these missing genetic links? Without them, all we have are huge neutral or non-beneficial gaps. What real-time examples, then, adequately support the theory of common descent via mindless Darwinian evolution beyond very low levels of functional complexity?

Common Examples of Evolution in Action

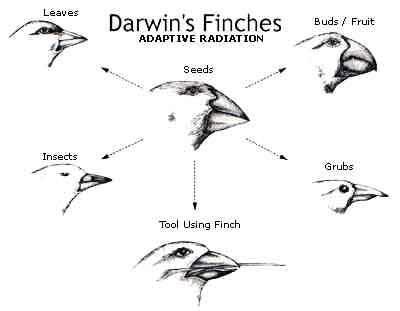

Common examples of evolution, including the differences among the finches of the Galapagos Islands that Darwin noted, are really nothing more than variations within a common, unchanging or "static" gene pool. As early as 1865, Gregor Mendel, the father of genetics, described such variations as the result of the mixing and matching of constant traits, or alleles. He described the variations of pea plants by stating that “Pea hybrids form germinal and pollen cells that in their composition correspond in equal numbers to all the constant forms resulting from the combination of traits united through fertilization.”12 In other words, all the various forms that exist in the pea plant were contained within a common gene pool of trait options. Mendel found that the phenotypic expression of individual plants was merely the reflection of a small portion of a large but finite pool of options within a common gene pool.
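Mendel's description of hybrids can be sketched as a two-gene (dihybrid) cross. The genes chosen, R/r for round versus wrinkled seeds and Y/y for yellow versus green seeds, with the uppercase allele dominant, are assumptions for illustration, and the helper names are hypothetical. Crossing two double hybrids gives the familiar 9:3:3:1 ratio of visible forms, yet every gamete, and therefore every offspring, is built only from alleles the parents already carried.

```python
from collections import Counter
from itertools import product

# Illustrative assumption: two pea genes, R/r (round vs. wrinkled seed) and
# Y/y (yellow vs. green seed); the uppercase allele is dominant.
HYBRID = ("Rr", "Yy")  # a plant heterozygous at both genes


def gametes(genotype: tuple[str, ...]) -> list[str]:
    """All gametes one parent can form, one allele per gene, each equally likely."""
    return ["".join(alleles) for alleles in product(*genotype)]


def phenotype(egg: str, pollen: str) -> str:
    """Visible form of the offspring: a dominant allele masks a recessive one."""
    return "".join(
        a.upper() if (a.isupper() or b.isupper()) else a.lower()
        for a, b in zip(egg, pollen)
    )


def cross(mother: tuple[str, ...], father: tuple[str, ...]) -> Counter:
    """Tally offspring phenotypes over every egg/pollen combination."""
    return Counter(
        phenotype(egg, pollen)
        for egg, pollen in product(gametes(mother), gametes(father))
    )


if __name__ == "__main__":
    print(gametes(HYBRID))        # the four gamete types
    print(cross(HYBRID, HYBRID))  # 9 RY : 3 Ry : 3 rY : 1 ry
```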

Therefore, although such changes can be very dramatic, they need not depend upon mutation or upon the acquisition of new genes or traits that were not already available. The same can be said for other famous examples, such as the shift in the color of England's peppered moth, not to mention that questions have been raised about the methods Kettlewell used in his original analysis of peppered moth pigment "evolution".6,7,8 But even if his methods were completely flawless, such changes are only examples of phenotypic variance within the same gene pool. In other words, the moths did not evolve a color or pattern that was not already being expressed by moths within the same gene pool. This type of phenotypic variation, although directed by natural selection, is not an example of Darwinian-style genetic evolution, since no new genes, systems, or traits with novel functions were added to the pool.
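The kind of shift described above can be sketched with the standard one-gene selection model from population genetics. The starting frequency, the 20% fitness cost for light moths, and the 50 generations are hypothetical numbers, not Kettlewell's data. The model only moves the frequencies of the two existing alleles, C (dark, dominant) and c (light); nothing in it creates a new allele.

```python
# One-locus selection model; p0, s, and the generation count are hypothetical.


def next_dark_frequency(p: float, s: float) -> float:
    """Frequency of the dark allele C after one generation of selection.

    Assumes random mating (Hardy-Weinberg proportions), C dominant so that
    CC and Cc moths are dark, and light cc moths with relative fitness 1 - s.
    Then p' = (p*p + p*q) / w_bar = p / w_bar, where w_bar = 1 - s*q*q.
    """
    q = 1.0 - p
    mean_fitness = 1.0 - s * q * q
    return p / mean_fitness


def simulate(p0: float = 0.01, s: float = 0.2, generations: int = 50) -> list[float]:
    """Dark-allele frequency in each generation, starting from p0."""
    freqs = [p0]
    for _ in range(generations):
        freqs.append(next_dark_frequency(freqs[-1], s))
    return freqs


if __name__ == "__main__":
    freqs = simulate()
    for gen in range(0, len(freqs), 10):
        p = freqs[gen]
        dark_share = 1 - (1 - p) ** 2  # share of moths showing the dark color
        print(f"generation {gen:2d}: allele C {p:.3f}, dark moths {dark_share:.3f}")
```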

Other famous examples of evolution in action, such as flightless birds on windy islands, cave fish without eyes, and sickle cell anemia, among many others, are based on the destruction of, or interference with, existing genes and traits rather than on the evolution of new traits or functions from scratch. All of these examples involve either the loss of a gene or a disruption of its regulation. A change in the degree of function, or the complete loss of a gene, is far different from the evolution of a new and unique genetic function (remember Humpty Dumpty). Such losses of, and interference with, established functions do have phenotypic effects that nature can detect and select for or against, but nothing really new or unique is made that was not already there. There are, of course, many examples detailing the evolution of unique genes producing uniquely functional products, but such examples of real evolution in action are clearly limited to the lowest rungs of the ladder of increasing functional complexity (e.g., the "limited evolutionary potential" of Hall's E. coli bacteria).

Crystals, Fractals, Chaos, and Complexity

Some might say that crystal formation is an example of the spontaneous formation of self-replicating complexity. However, crystal formation is not an example of a language system, where the collective function of the parts is greater than the sum of the parts. The information needed for the order of a crystalline structure is entirely contained within each molecule of that structure. This is not the case with the information contained in a molecule of human DNA. The information for the specific order of this molecule is not contained in any of the individual molecules that make up the strand. The information content is more complex than the molecules themselves; the information carried by DNA is greater than the sum of its parts. The same thing is true of the English language system. The letters in the sentence you are reading do not know how to assemble themselves into this sentence in a meaningful way. Clearly, then, the information in a crystalline structure does not come close to that of even the simplest language system, much less the information content of DNA or of living systems of function.

Of course, many crystalline structures appear very "complex", but this complexity is based on the relatively low "information" content needed to form fractals. Very little information is needed to form such fractal-type structures, even though the final structure might appear very complicated indeed. But this "complexity" isn't really "informational complexity"; it is better termed "chaos" or "randomness". Fractals and other such structures carry no more information than their least common denominator, the simple rule or unit from which they are built. This is not like DNA and other symbol-based language systems, where the information carried by the order of the parts is much greater than the total sum of the information carried inherently within the parts themselves.18
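A minimal illustration of this point, assuming a Sierpinski-type pattern as the example fractal (the text does not single one out): one short bitwise rule produces an intricate, self-similar figure at any size, so the rule that specifies it stays the same however large and elaborate the output becomes. The function name is hypothetical.

```python
def sierpinski(order: int) -> list[str]:
    """Draw a Sierpinski-style pattern on a 2**order by 2**order grid.

    The entire figure comes from one rule: cell (x, y) is filled
    whenever x and y share no set bits, i.e. (x & y) == 0.
    """
    size = 2 ** order
    return [
        "".join("#" if (x & y) == 0 else "." for x in range(size))
        for y in range(size)
    ]


if __name__ == "__main__":
    pattern = sierpinski(5)
    print("\n".join(pattern))
    filled = sum(row.count("#") for row in pattern)
    print(f"{len(pattern) ** 2} cells, {filled} filled, all from a one-line rule")
```

Raising `order` by one doubles the width and height of the figure, but the generating rule does not change at all.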

So Confusing!

It all seems quite confusing.

The theory of evolution is not as easy to understand as many have led us to believe. Even those who have been trained in evolutionary thought, such as biochemist Michael Behe, and others like Nobel Laureates Richard Smalley and Charles Hard Townes, astronomer Sir Frederick Hoyle, mathematician Chandra Wickramasinghe, and many others, seem confused. If evolution is so simple to understand, then how can some of the most educated, intelligent, and rational scientists, who have had the best and most rigorous training that evolutionary thought and science have to offer, still not get it? If anyone should be clear on how evolution works, these scientists should be. And yet some of these scientists are challenging the very existence of genetic evolution.

Is the idea of irreducible complexity really all that crazy?

And yet the truth of evolution is so obvious to so many that those who are skeptical of it must be "ignorant, stupid, or insane,"19 or all of the above.

But what about all those maquiziliducks?

Behe, Michael J. Darwin’s Black Box, The Free Press, 1996.

Stryer, Lubert. Biochemistry, 3rd ed., 1988, pp. 153, 744.

Dawkins, Richard. The Blind Watchmaker, 1987.

Hall, B.G. Evolution on a Petri Dish: The Evolved B-Galactosidase System as a Model for Studying Acquisitive Evolution in the Laboratory, Evolutionary Biology, 15 (1982): 85–150.

Kimura, Motoo. The Neutral Theory of Molecular Evolution, New Scientist, 1985, pp. 41–46.

Lees, D.R. & Creed, E.R. Industrial Melanism in Biston betularia: The Role of Selective Predation, Journal of Animal Ecology, 44 (1975): 67–83.

Coyne, J.A. Nature, 396(6706): 35–36.

The Washington Times, January 17, 1999, p. D8.

Dugaiczyk, Achilles. Lecture Notes, Biochemistry 110-A, University of California, Riverside, Fall 1999.

Ayala, Francisco J. Teleological Explanations in Evolutionary Biology, Philosophy of Science, March 1970, p. 3.

Lewin, Benjamin. Genes V, Oxford University Press, 1994.

Mendel, Gregor. Experiments in Plant Hybridization, 1865.

Ninth International Conference on Microbial Genomes, October 28–November 1, 2001, Gatlinburg, TN (http://cgb.utmem.edu/meeting_reports/redwards_11_06_01.htm).

http://genetics.hannam.ac.kr/lecture/Mgen02/Mutation%20Rates.htm

Beardsley, Tim. The Degeneration of Man, Scientific American, April 1999, p. 32.

Dawkins, Richard. "Put Your Money on Evolution", review of Johanson, D. & Edey, M.A., "Blueprints: Solving the Mystery of Evolution", The New York Times, April 9, 1989, sec. 7, p. 34.
